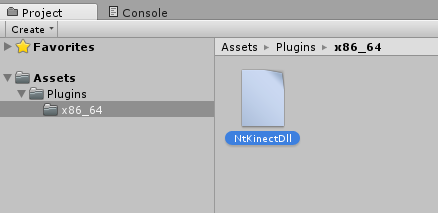

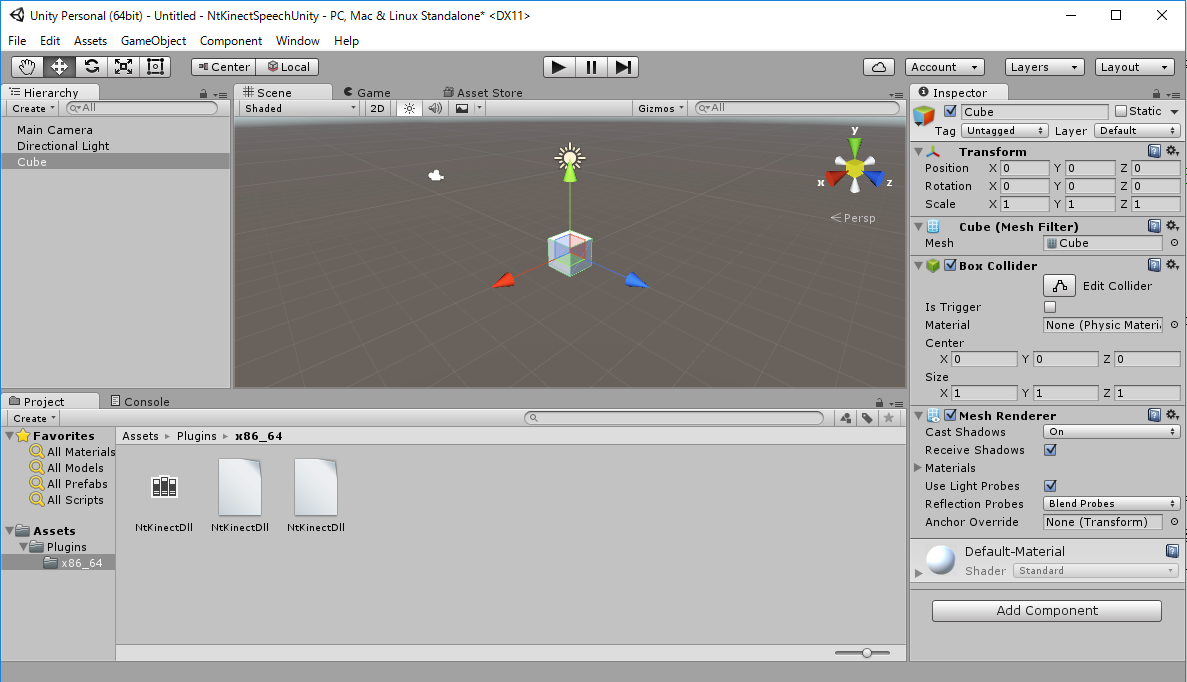

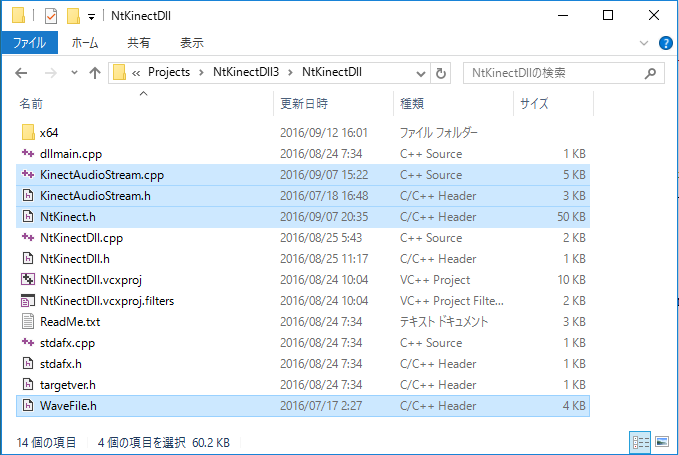

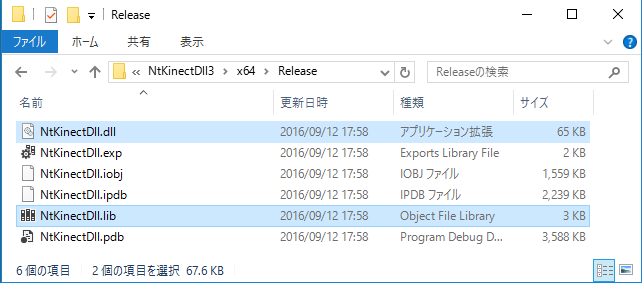

The project should have the following file structure. Other folders and files may also be present, but ignore them for now.

- NtKinectDll3/
  - NtKinectDll.sln
  - x64/Release/
  - NtKinectDll/
    - dllmain.cpp
    - NtKinect.h
    - NtKinectDll.h
    - NtKinectDll.cpp
    - stdafx.cpp
    - stdafx.h
    - targetver.h

Copy KinectAudioStream.cpp, KinectAudioStream.h, and WaveFile.h to the folder that holds the project source files (NtKinectDll3/NtKinectDll/ in this example).

[Notice] KinectAudioStream.cpp is slightly modified to include stdafx.h.

The folder contents are now as follows.

- NtKinectDll3/
  - NtKinectDll.sln
  - x64/Release/
  - NtKinectDll/
    - dllmain.cpp
    - NtKinect.h
    - NtKinectDll.h
    - NtKinectDll.cpp
    - stdafx.cpp
    - stdafx.h
    - targetver.h
    - KinectAudioStream.cpp
    - KinectAudioStream.h
    - WaveFile.h

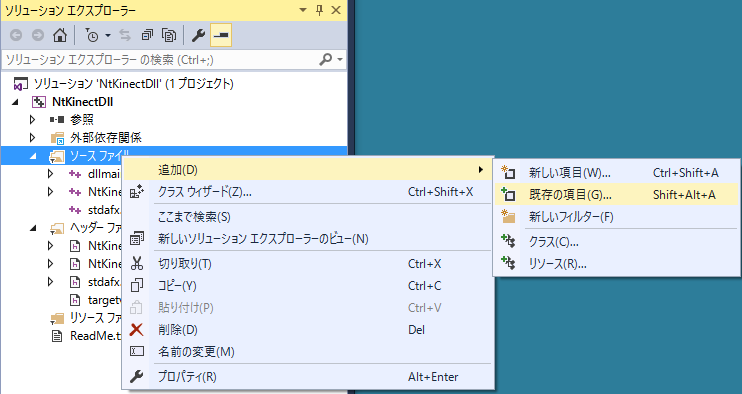

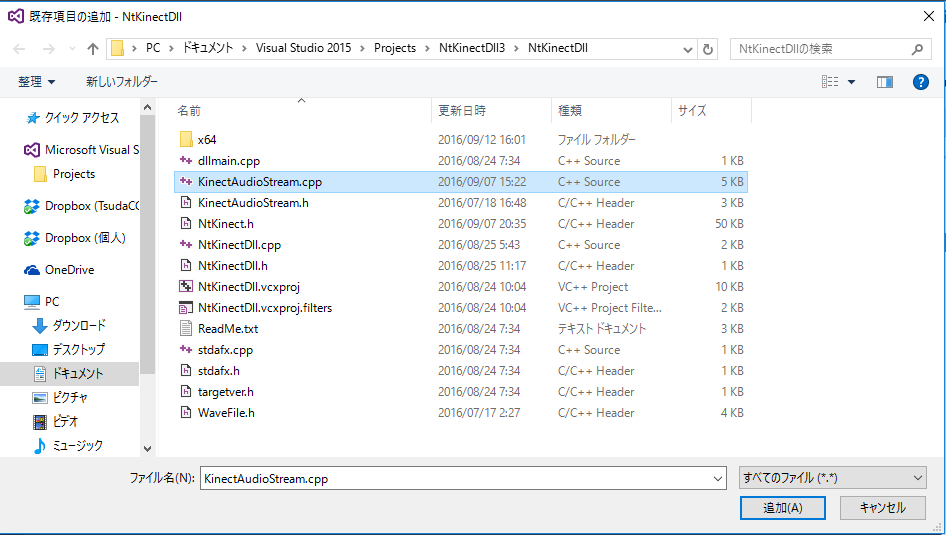

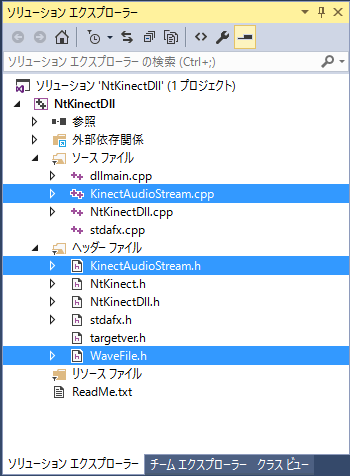

- Add KinectAudioStream.cpp to the project's "Source Files".

- Add KinectAudioStream.h and WaveFile.h to the project's "Header Files".

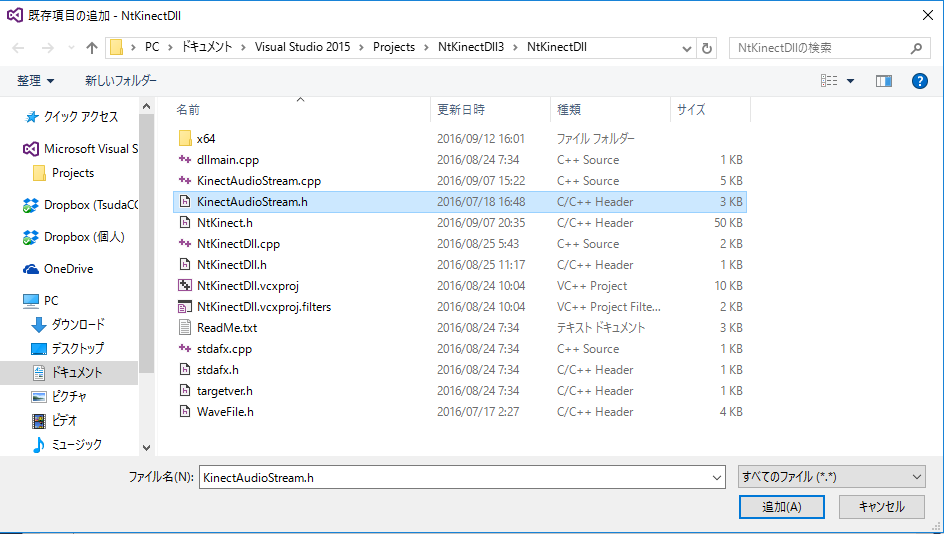

"Solution Explorer" -> Right-click over the "Source Files" -> "Add" -> "Add existing item" -> select "KinectAudioStream.cpp"

"Solution Explorer"-> Right-click over the "Header File" -> "Add" -> "Add existing item" -> select "KinectAudioStream.h"

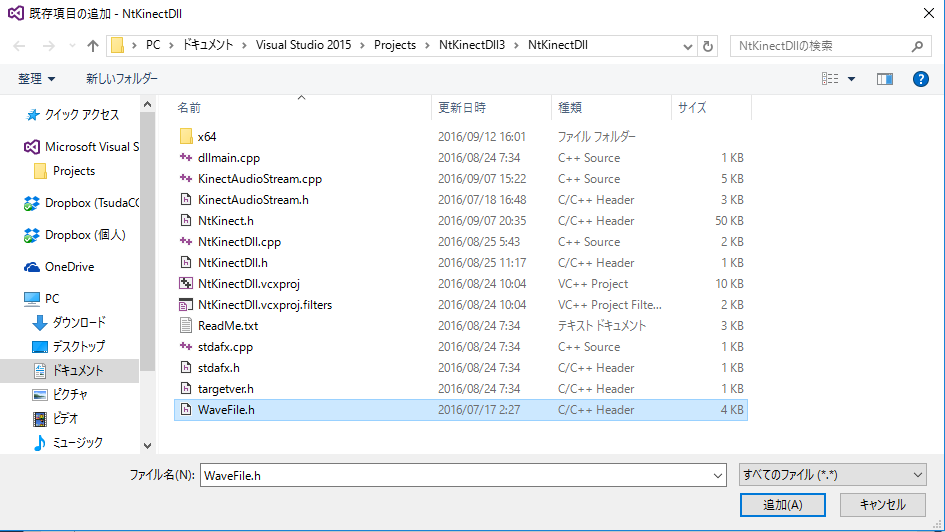

"Solution Explorer" -> Right-click over "header file" -> 「追加」 -> 「既存の項目の追加」 -> WaveFile.h を選択する

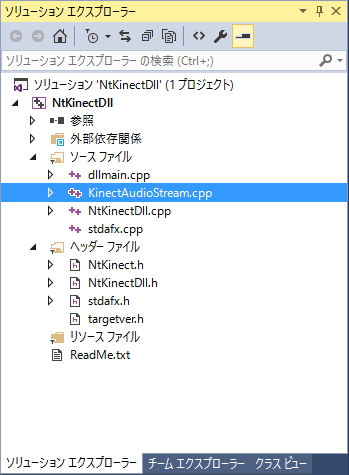

Three files have been added to the project. NtKinect.h was already part of the project from the beginning.

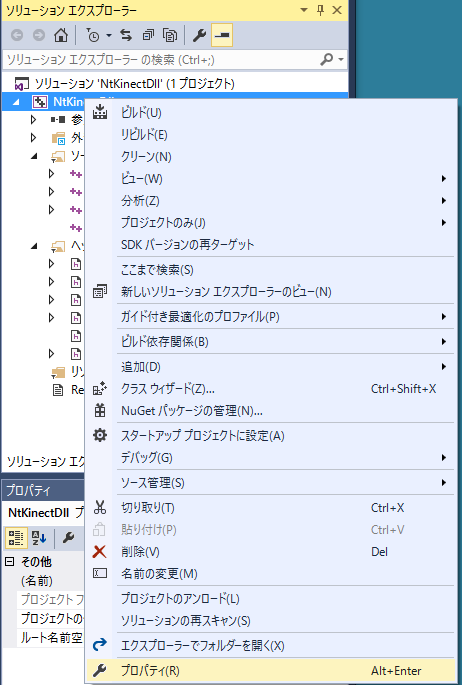

- In the Solution Explorer, right-click the project name and select "Properties".

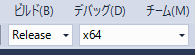

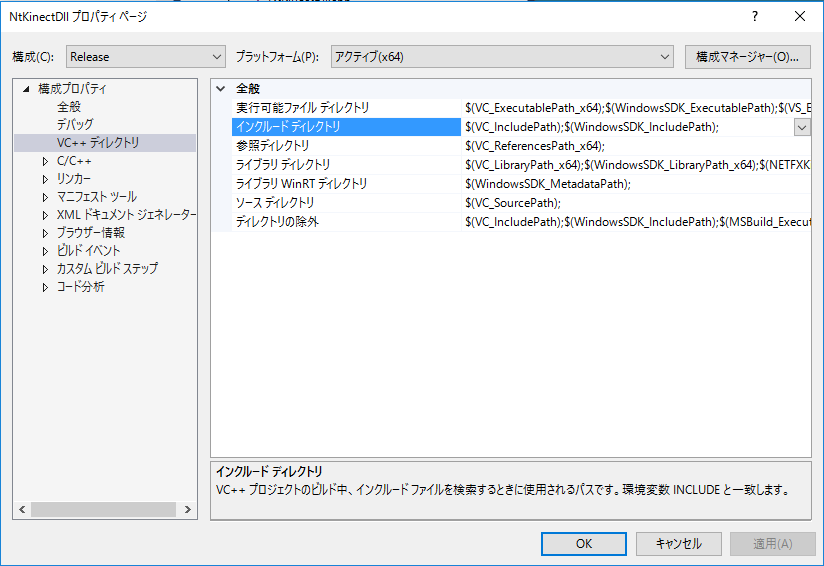

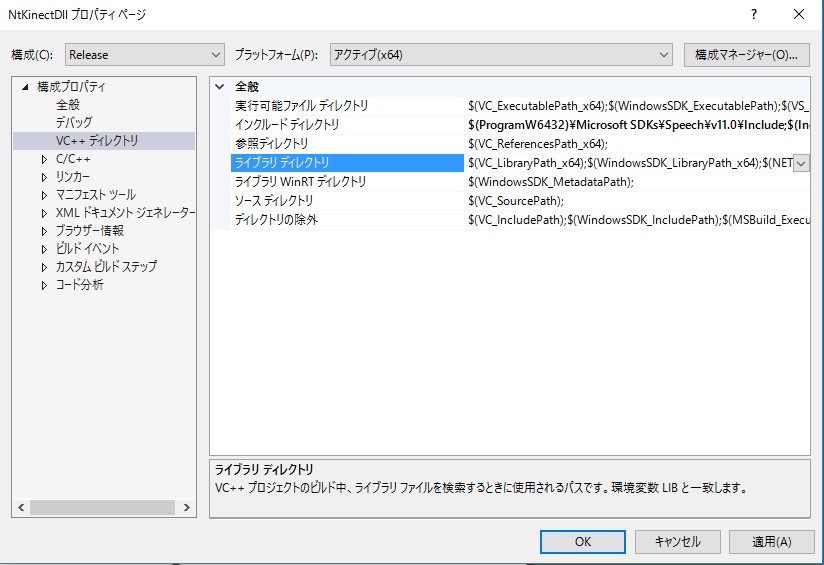

- Make the settings with Configuration set to "All Configurations" and Platform set to "Active (x64)". This configures "Debug" and "Release" mode at the same time. Of course, you can also change the settings for each mode separately.

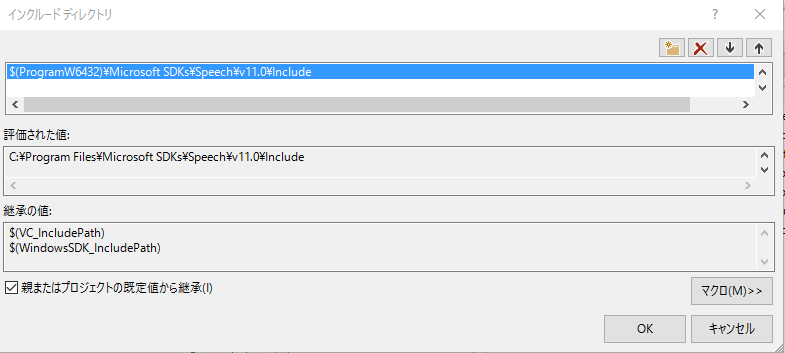

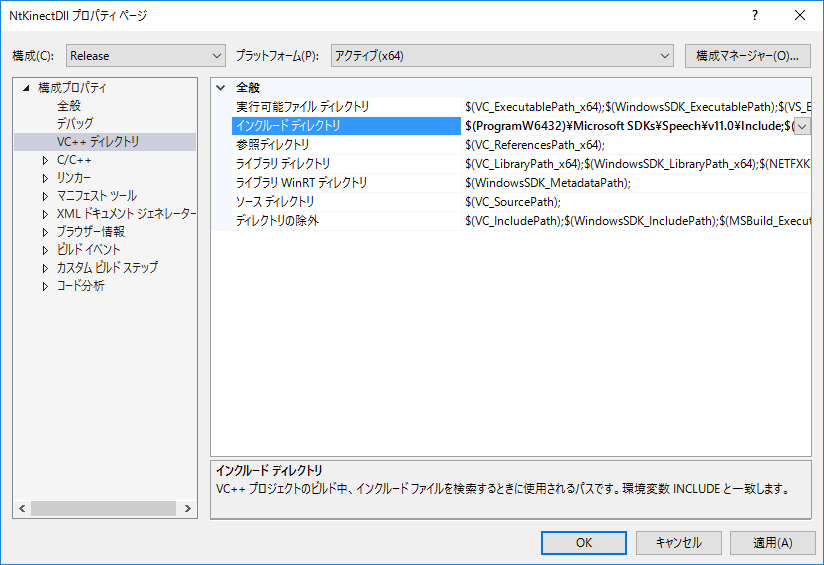

- Add the location of the include files. Put it first in the list so that the correct file is loaded even if a header file with the same name exists elsewhere.

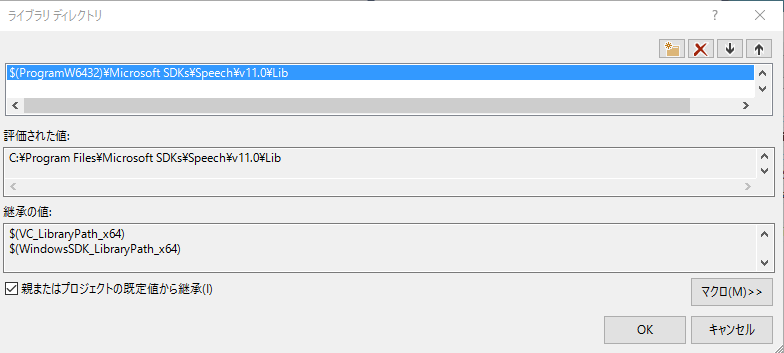

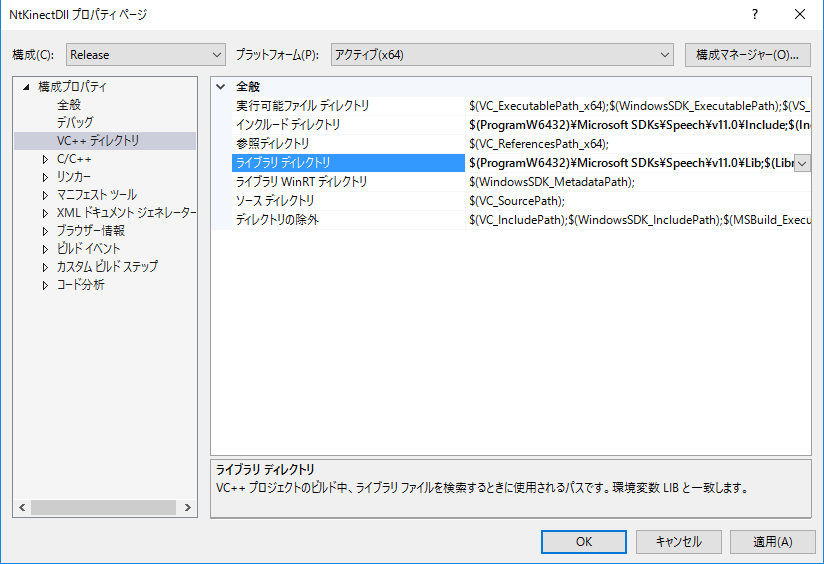

- Add the location of the library.

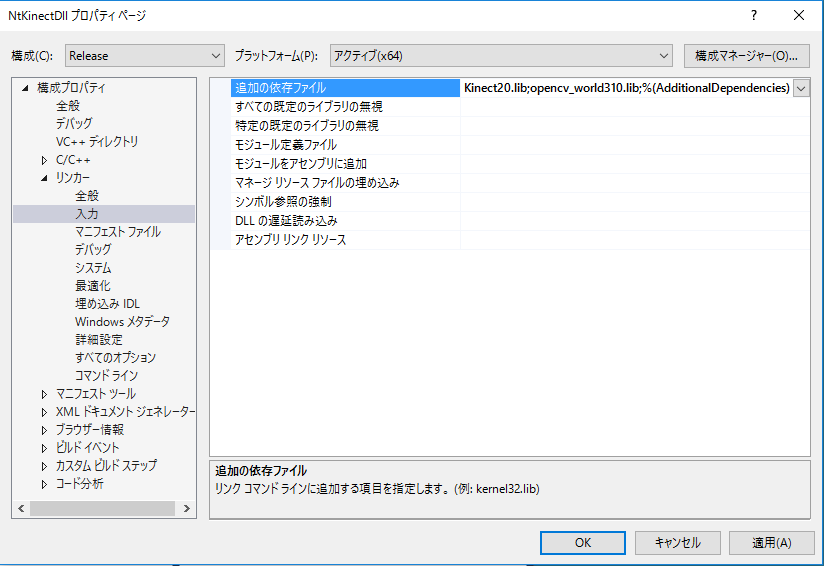

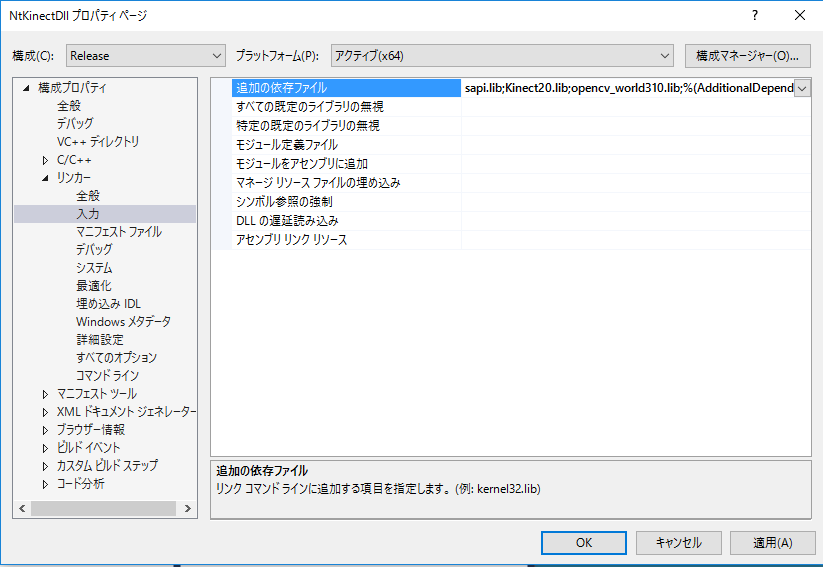

- Add the library sapi.lib just in case. (It seems to work without adding sapi.lib here.)

"Configuration Properties" -> "VC++ Directories" -> "Include Directories" -> Add the following as the first entry.

$(ProgramW6432)\Microsoft SDKs\Speech\V11.0\Include

"Configuration Properties" -> "VC++ Directories" -> "Library Directories" -> Add the following as the first entry.

$(ProgramW6432)\Microsoft SDKs\Speech\V11.0\Lib

"Configuration Properties" -> "Linker" -> "Input" -> "Additional Dependencies" -> Add the following.

sapi.lib

The following two libraries should already be set up, but please confirm.

Kinect20.lib opencv_world310.lib

The part shown in green in the original listing (the #ifdef NTKINECTDLL_EXPORTS ... #endif block) handles DLL import/export and has been there since the project was created. When NtKinectDll.h is included inside this project, NTKINECTDLL_API becomes an export declaration. In other projects it becomes an import declaration.

The part shown in blue covers the functions we define ourselves. A C++ compiler normally rewrites each function name into one that encodes the return type and argument types, which is called name mangling. To avoid mangling of C++ function names, declare the function prototypes inside extern "C" {}. This makes it possible to use the DLL from other languages.

In order to avoid name conflicts, we define a namespace NtKinectSpeech and declare the function prototypes and variables.
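The three ideas above (an export/import macro, extern "C" prototypes, and a namespace) can be sketched in one small file. This is only an illustration, not the actual NtKinectDll.h: the names DEMO_EXPORTS, DEMO_API, DemoLib, and sumInts are made up for the example, and the macro is left empty off Windows so the sketch compiles anywhere.

```cpp
#include <cassert>

// Hypothetical names throughout: DEMO_EXPORTS, DEMO_API, DemoLib, sumInts.
// Defining DEMO_EXPORTS marks this file as part of the DLL itself, so
// DEMO_API expands to dllexport on Windows. A client would leave it
// undefined and get dllimport instead.
#define DEMO_EXPORTS

#if defined(_WIN32)
#  ifdef DEMO_EXPORTS
#    define DEMO_API __declspec(dllexport)
#  else
#    define DEMO_API __declspec(dllimport)
#  endif
#else
#  define DEMO_API
#endif

namespace DemoLib {
    extern "C" {
        // C linkage: the symbol is exported without C++ name mangling.
        DEMO_API int sumInts(const int* values, int count);
    }

    // The definition inherits C linkage from the declaration above.
    DEMO_API int sumInts(const int* values, int count) {
        assert(count <= 0 || values != nullptr);
        int total = 0;
        for (int i = 0; i < count; ++i) {
            total += values[i];
        }
        return total;
    }
}
```

From C++ the function is called as DemoLib::sumInts(data, 3). A caller in another language looks it up by the unmangled name, since the namespace does not become part of a C-linkage symbol.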

Speech recognition always runs in a separate thread, and each recognized result is stored in speechQueue. Access to the queue is guarded by a mutex so that only one thread touches it at a time.
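The queue-plus-mutex arrangement can be sketched without any Kinect code. The names GuardedQueue, Entry, and produceFromWorker below are hypothetical stand-ins. The sketch also uses std::lock_guard where the DLL calls lock() and unlock() explicitly. The effect is the same, but the lock is released automatically on every exit path.

```cpp
#include <cassert>
#include <list>
#include <mutex>
#include <string>
#include <thread>
#include <utility>

// Hypothetical stand-in for the DLL's speechQueue and its mutex.
using Entry = std::pair<std::wstring, std::wstring>;  // (tag, item)

struct GuardedQueue {
    std::mutex mtx;
    std::list<Entry> items;

    // Called from the recognizer thread.
    void push(const Entry& e) {
        std::lock_guard<std::mutex> lock(mtx);  // released at scope exit
        items.push_back(e);
    }

    // Called from the caller's thread; returns false when nothing is queued.
    bool tryPop(Entry& out) {
        std::lock_guard<std::mutex> lock(mtx);
        if (items.empty()) {
            return false;
        }
        out = items.front();
        items.pop_front();
        return true;
    }
};

// Simulates a worker thread that "recognizes" count words, then joins it.
void produceFromWorker(GuardedQueue& q, int count) {
    assert(count >= 0);
    std::thread worker([&q, count] {
        for (int i = 0; i < count; ++i) {
            q.push(Entry(L"TAG" + std::to_wstring(i),
                         L"item" + std::to_wstring(i)));
        }
    });
    worker.join();
}
```

Entries come back from tryPop() in the order they were pushed, which matches the push_back / pop_front usage in the DLL.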

| NtKinectDll.h |

#ifdef NTKINECTDLL_EXPORTS

#define NTKINECTDLL_API __declspec(dllexport)

#else

#define NTKINECTDLL_API __declspec(dllimport)

#endif

#include <mutex>

#include <list>

#include <thread>

#include <string>

#include <utility>

namespace NtKinectSpeech {

extern "C" {

NTKINECTDLL_API void* getKinect(void);

NTKINECTDLL_API void initSpeech(void* kinect);

NTKINECTDLL_API void setSpeechLang(void* kinect,wchar_t*,wchar_t*);

NTKINECTDLL_API int speechQueueSize(void* kinect);

NTKINECTDLL_API int getSpeech(void* kinect,wchar_t*& tagPtr,wchar_t*& itemPtr);

NTKINECTDLL_API void destroySpeech(void* kinect);

}

std::mutex mutex;

std::thread* speechThread;

std::list<std::pair<std::wstring,std::wstring>> speechQueue;

bool speechActive;

#define SPEECH_MAX_LENGTH 1024

wchar_t tagBuffer[SPEECH_MAX_LENGTH];

wchar_t itemBuffer[SPEECH_MAX_LENGTH];

}

|

"NTKINECTDLL_API" must be written at the beginning of the function declaration. This is a macro defined in NtKinectDll.h to facilitate export/import from DLL.

Objects used by the DLL must be allocated on the heap. For this reason, the void* getKinect() function allocates an NtKinect instance on the heap and returns a pointer to it cast to void*.

When a DLL function is called, the pointer to the NtKinect object is passed as an argument of type (void *). We cast it to a pointer of type (NtKinect *) and call NtKinect's functions through it. For example, the member function acquire() is called as (*kinect).acquire().
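The void* handle pattern described above can be sketched with a tiny class in place of NtKinect. Counter, createCounter, incrementCounter, and destroyCounter are hypothetical names used only for this illustration. In particular, the destroy function is an assumption of the sketch and is not something the DLL's header declares.

```cpp
#include <cassert>

// Hypothetical class standing in for NtKinect.
class Counter {
public:
    void increment() { ++value_; }
    int value() const { return value_; }
private:
    int value_ = 0;
};

extern "C" {
    // Allocates the object on the heap and hides its type behind void*.
    void* createCounter(void) {
        Counter* counter = new Counter;
        return static_cast<void*>(counter);
    }

    // Casts the opaque handle back to the real type before using it.
    int incrementCounter(void* handle) {
        assert(handle != nullptr);
        Counter* counter = static_cast<Counter*>(handle);
        (*counter).increment();  // same as counter->increment()
        return (*counter).value();
    }

    // Releases the object; the handle must not be used afterwards.
    void destroyCounter(void* handle) {
        delete static_cast<Counter*>(handle);
    }
}
```

The caller only ever stores and passes back the pointer value, so it never needs to know the layout of the C++ class.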

On the Unity (C#) side, data is managed and may be moved by the garbage collector. Be careful when exchanging data between C# and C++.

The first argument of NtKinect's setSpeechLang() function is of type string, and the second argument is of type wstring. When a Unity (C#) string is passed to a DLL (C++) function, it arrives as wide characters (UTF-16) and can be used directly as a wstring (UTF-16) on the C++ side. When string data is needed, it must be converted from wstring (UTF-16) to string (UTF-8) in C++ with the WideCharToMultiByte() function. In the definition of void setSpeechLang(void*, wchar_t*, wchar_t*) in NtKinectDll.cpp, the first WideCharToMultiByte() call finds the number of bytes needed for the converted characters, and the second call converts the characters from UTF-16 to UTF-8 and writes them into langBuffer.
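The "measure first, then convert" pattern behind the two calls can be sketched portably. The hand-written encoder below stands in for WideCharToMultiByte() so that the example compiles outside Windows. The names encodeUtf8 and utf16ToUtf8 are hypothetical. The sketch uses char16_t because wchar_t is only guaranteed to be 16-bit UTF-16 on Windows.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>

// Hypothetical stand-in for WideCharToMultiByte(CP_UTF8, ...).
// With out == nullptr it only counts the UTF-8 bytes needed (first call);
// with a buffer it also writes them (second call). The count excludes the
// terminating NUL. Unpaired surrogates are not validated in this sketch.
std::size_t encodeUtf8(const char16_t* src, char* out) {
    assert(src != nullptr);
    std::size_t n = 0;
    for (std::size_t i = 0; src[i] != 0; ++i) {
        std::uint32_t cp = src[i];
        const std::uint32_t next = src[i + 1];  // at worst the terminator
        if (cp >= 0xD800 && cp <= 0xDBFF && next >= 0xDC00 && next <= 0xDFFF) {
            // Combine a surrogate pair into one code point.
            cp = 0x10000 + ((cp - 0xD800) << 10) + (next - 0xDC00);
            ++i;
        }
        unsigned char buf[4];
        std::size_t len = 0;
        if (cp < 0x80) {
            buf[len++] = static_cast<unsigned char>(cp);
        } else if (cp < 0x800) {
            buf[len++] = static_cast<unsigned char>(0xC0 | (cp >> 6));
            buf[len++] = static_cast<unsigned char>(0x80 | (cp & 0x3F));
        } else if (cp < 0x10000) {
            buf[len++] = static_cast<unsigned char>(0xE0 | (cp >> 12));
            buf[len++] = static_cast<unsigned char>(0x80 | ((cp >> 6) & 0x3F));
            buf[len++] = static_cast<unsigned char>(0x80 | (cp & 0x3F));
        } else {
            buf[len++] = static_cast<unsigned char>(0xF0 | (cp >> 18));
            buf[len++] = static_cast<unsigned char>(0x80 | ((cp >> 12) & 0x3F));
            buf[len++] = static_cast<unsigned char>(0x80 | ((cp >> 6) & 0x3F));
            buf[len++] = static_cast<unsigned char>(0x80 | (cp & 0x3F));
        }
        if (out != nullptr) {
            for (std::size_t k = 0; k < len; ++k) {
                out[n + k] = static_cast<char>(buf[k]);
            }
        }
        n += len;
    }
    return n;
}

// Pass 1 sizes the string, pass 2 fills it; std::string frees the memory.
std::string utf16ToUtf8(const char16_t* src) {
    std::string result(encodeUtf8(src, nullptr), '\0');
    if (!result.empty()) {
        encodeUtf8(src, &result[0]);
    }
    return result;
}
```

Letting std::string own the destination means no manually allocated buffer has to be released after the conversion.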

When passing C++ wstring data to Unity (C#), the wchar_t data must be stored in memory that remains valid after the function returns, and the address of that memory is passed back. In this DLL the buffers tagBuffer and itemBuffer declared in NtKinectDll.h serve that purpose. The getSpeech() function returns two wstring values, the tag and the item of the recognized word. C# passes two pointer references as arguments, and C++ writes the addresses of the wchar_t buffers into them.
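The hand-off used by getSpeech() can be sketched with a single string. The names kMaxLength, resultBuffer, and fetchText are hypothetical. The sketch copies with an explicit length limit instead of a formatted print, which is a design choice of this example and not what the DLL does.

```cpp
#include <cassert>
#include <cstddef>
#include <cwchar>
#include <string>

// Hypothetical names: kMaxLength, resultBuffer, fetchText.
const std::size_t kMaxLength = 1024;

// Lives as long as the module is loaded, so the pointer handed to the
// caller stays valid after the function returns.
static wchar_t resultBuffer[kMaxLength];

// Copies text into the buffer (truncating if needed) and writes the
// buffer's address into the caller's pointer. Returns the copied length.
int fetchText(const std::wstring& text, wchar_t*& outPtr) {
    std::wmemset(resultBuffer, L'\0', kMaxLength);
    const std::size_t n =
        text.size() < kMaxLength - 1 ? text.size() : kMaxLength - 1;
    assert(n < kMaxLength);  // always leaves room for the terminator
    std::wmemcpy(resultBuffer, text.c_str(), n);
    outPtr = resultBuffer;
    return static_cast<int>(n);
}
```

Because the same buffer is reused on every call, the caller should copy the text out before calling again.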

| NtKinectDll.cpp |

#include "stdafx.h"

#include "NtKinectDll.h"

#define USE_THREAD

#define USE_SPEECH

#include "NtKinect.h"

using namespace std;

namespace NtKinectSpeech {

// Allocate NtKinect on the heap and return it as an opaque pointer.

NTKINECTDLL_API void* getKinect(void) {

NtKinect* kinect = new NtKinect;

return static_cast<void*>(kinect);

}

NTKINECTDLL_API void setSpeechLang(void* ptr, wchar_t* wlang, wchar_t* grxmlBuffer) {

NtKinect *kinect = static_cast<NtKinect*>(ptr);

if (wlang && grxmlBuffer) {

// First call: get the number of UTF-8 bytes needed.

int len = WideCharToMultiByte(CP_UTF8,0,wlang,-1,NULL,0,NULL,NULL) + 1;

char* langBuffer = new char[len];

memset(langBuffer,'\0',len);

// Second call: convert UTF-16 to UTF-8 and write it to langBuffer.

WideCharToMultiByte(CP_UTF8,0,wlang,-1,langBuffer,len,NULL,NULL);

string lang(langBuffer);

delete[] langBuffer; // lang holds its own copy

wstring grxml(grxmlBuffer);

(*kinect).acquire();

(*kinect).setSpeechLang(lang,grxml);

(*kinect).release();

}

}

// Runs in its own thread and pushes recognized words onto speechQueue.

void speechThreadFunc(NtKinect* kinect) {

ERROR_CHECK(CoInitializeEx(NULL,COINIT_MULTITHREADED));

(*kinect).acquire();

(*kinect).startSpeech();

(*kinect).release();

while (speechActive) {

pair<wstring,wstring> p;

bool flag = (*kinect)._setSpeech(p);

if (flag) {

mutex.lock();

speechQueue.push_back(p);

mutex.unlock();

}

std::this_thread::sleep_for(std::chrono::milliseconds(10));

}

}

NTKINECTDLL_API void initSpeech(void* ptr) {

NtKinect *kinect = static_cast<NtKinect*>(ptr);

speechActive = true;

speechThread = new std::thread(NtKinectSpeech::speechThreadFunc, kinect);

return;

}

NTKINECTDLL_API int speechQueueSize(void* ptr) {

int n=0;

mutex.lock();

n = (int) speechQueue.size();

mutex.unlock();

return n;

}

NTKINECTDLL_API int getSpeech(void* ptr, wchar_t*& tagPtr, wchar_t*& itemPtr) {

NtKinect *kinect = static_cast<NtKinect*>(ptr);

wmemset(tagBuffer,L'\0',SPEECH_MAX_LENGTH);

wmemset(itemBuffer,L'\0',SPEECH_MAX_LENGTH);

pair<wstring,wstring> p;

mutex.lock();

bool empty = speechQueue.empty();

if (!empty) {

p = speechQueue.front();

speechQueue.pop_front();

}

mutex.unlock();

if (!empty) {

// Pass c_str(): a wstring object cannot be passed as a "%ls" argument.

wsprintf(tagBuffer,L"%ls",p.first.c_str());

wsprintf(itemBuffer,L"%ls",p.second.c_str());

}

tagPtr = tagBuffer;

itemPtr = itemBuffer;

return !empty;

}

NTKINECTDLL_API void destroySpeech(void* ptr) {

// Stop the worker loop and wait for the thread to finish.

speechActive = false;

speechThread->join();

NtKinect *kinect = static_cast<NtKinect*>(ptr);

(*kinect).acquire();

(*kinect).stopSpeech();

(*kinect).release();

delete speechThread;

CoUninitialize();

}

}

|

[Caution] (added Oct/07/2017) If you encounter a "dllimport ..." error when building with Visual Studio 2017 Update 2, please refer to here and define NTKINECTDLL_EXPORTS in NtKinectDll.cpp.

Since the above zip file may not include the latest "NtKinect.h", download the latest version from here and replace the old one with it.